It's hard to believe, but for just under a month now I have been over 30 years of age. So, too, has the World Wide Web.

I was discussing this with someone yesterday, and we talked about how the web has evolved from a simple document-sharing system into a platform on which you can build and use powerful applications. I say this because on Monday I went to one of my favourite restaurants, and they use a web app to manage everything, and I mean everything. It's very impressive.

Anyway, take a look at How-To Geek for more on this:

https://www.howtogeek.com/744795/the-first-website-how-the-web-looked-30-years-ago/

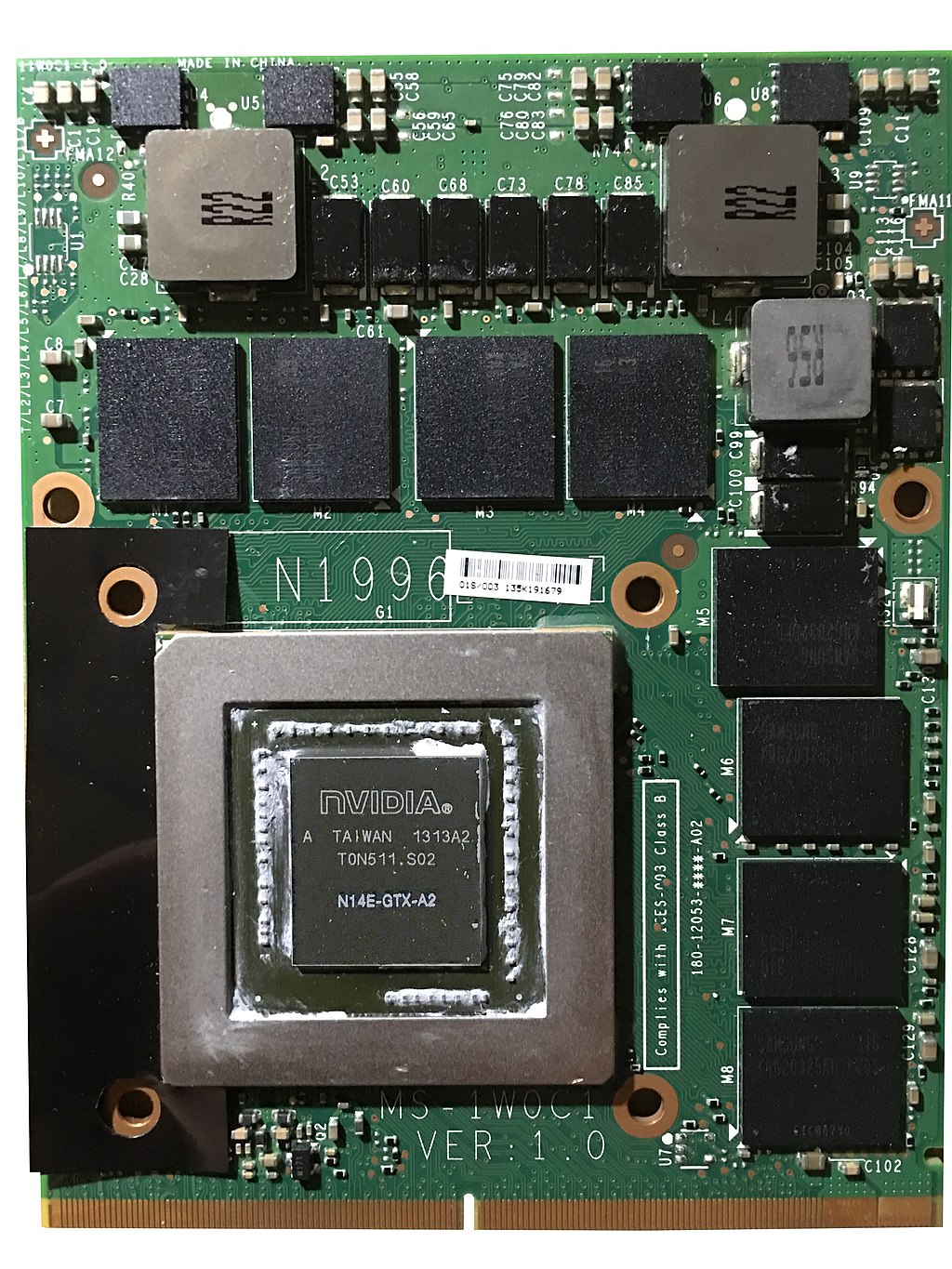

MXM, or Mobile PCI Express Module, is one of the most interesting standards I know of. I first found out about it in 2005, when I was trying to fix a laptop for a friend (Calum). His Fujitsu Amilo 3438 featured a removable GPU, something I had never seen before. When I was asked to try to repair it, I was honestly astonished at how easy the job would be.

Unfortunately, it didn't end as nicely as it should have, and the laptop seemed to be beyond repair. I am glad I got the chance to try, though, because it brought the MXM standard to my attention.

Having seen that MXM was a standard, and a very good idea in practice, I have been a fan of it for a very long time.

As someone who believes strongly in the Right to Repair, I think MXM may have a big part to play in the future. But what do the companies that build systems which could incorporate MXM actually think?

What is MXM?

MXM is one of those really brilliant ideas that is unfortunately held back by the manufacturers of computer systems. Adding replaceable graphics cards to a system is no doubt more expensive than permanently soldering them on. However, it is also less profitable in the long run, and that is my main concern.

The MXM standard is designed to provide owners with the ability to upgrade their systems at a later date or replace parts when they stop working. But the problem with this idea is, at least in the eyes of the manufacturer, that the customers will stick with the same computer system for longer rather than upgrading it regularly.

Another major issue with MXM is that it takes up more room than a soldered GPU and therefore doesn't allow for incredibly thin laptop designs (like the MacBooks, where Apple sacrifices everything for thinner and thinner computers).

Because MXM cards are detachable components, they are also more likely to fail through connector faults. These are far less likely with soldered GPUs.

But even with all of these problems, MXM still addresses one major concern that should be more prominent now than ever: the environmental impact. It concerns me that we have become very wasteful with computers that have soldered memory and storage (like my old MacBook Pro and now my current one). A soldered GPU basically means that when the GPU decides to pack it in, the whole system stops working. I've had this happen on numerous computers. MXM allows us to replace a broken GPU or upgrade an old one, giving the computer a new lease of life. From a purely environmental point of view, this would be amazing.

With new right-to-repair laws being passed, surely the time is right for MXM to take centre stage?

This is a very interesting read on why Apple's M1 chip is so powerful.

As someone who loves computer hardware, I found this article a very interesting reminder of the relative strengths of RISC and CISC architectures.

https://medium.com/swlh/what-does-risc-and-cisc-mean-in-2020-7b4d42c9a9de

Hello folks, I hope everyone is okay and not as fed up as I am with everything going on in the world at present!

Today I was thinking about something that I use every day: switchable graphics. My MacBook Pro has an Nvidia GeForce GT 750M. This is a decent dedicated graphics card that can run some games well, but it has never been used for gaming. In fact, my dedicated card only gets used for graphics and video editing. The rest of the time, the MacBook stays cool and quiet running on the Intel Iris Pro graphics. It is truly amazing that my computer switches automatically without me even noticing (other than the notification I have set up).

So, what about switchable memory? You could have a low-power type such as LPDDR3 alongside high-performance DDR4 as the fast memory. It wouldn't be a particularly good idea in smaller laptops, but in a 15" machine such as my MacBook Pro it might be an excellent one. Something like this could also help a device such as the Nintendo Switch. It could use lower-power RAM on the move, then switch to a more powerful implementation when it is docked.

Of course, there are issues with this concept of switchable memory. The main one that comes to mind is how do you keep them in sync? If one memory is to be turned off, you need a fast bus or lane to transfer the data from one memory module or type to the other. This could also end up using a lot of power.

This is just a thought...

Visual Basic was the first language I learned, all the way back in my early teens. I built some amazing things with it, including my own Photoshop-style image editor and a web browser with many big features. But now Microsoft has declared the end of it.

Read more below:

https://www.thurrott.com/dev/232268/microsoft-plots-the-end-of-visual-basic

I was talking with a friend the other day about limiting the frames per second (FPS) in a video game when playing on your PC. Neither of us came away with a much clearer understanding than before, and neither side won the argument.

Many people do not realise how important limiting the FPS in a video game can be for performance. Think about it this way: every frame the graphics card has to produce is more work for the computer.

A monitor that refreshes at 60Hz can display at most 60 frames per second. If the game renders 120 frames per second, roughly 60 of those frames are never fully shown. V-sync works on a similar principle. It ties each new frame to the monitor's refresh, which effectively caps the frame rate at the refresh rate. Limiting the FPS stops the GPU from working overtime on frames you will never see.

Meanwhile, other work your computer could be doing, such as calculating the positions of units in a game, has to wait until those frames have rendered. That is wasted CPU and GPU time.
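For anyone curious what a frame limiter actually does, here is a minimal sketch in Python. It assumes a simple game loop and rests on the arithmetic above: at a target frame rate, each frame gets a fixed time budget (1/60 of a second at 60 FPS). Whatever is left of that budget after rendering is handed back to the operating system instead of being spent on another frame. The function names (`frame_budget`, `unseen_frames`, `sleep_needed`, `run_capped`) and the `render_frame` callback are hypothetical illustrations, not any game engine's or driver's API. Real limiters use more precise timing than `time.sleep`, which can overshoot.

```python
import time
from typing import Callable


def frame_budget(target_fps: float) -> float:
    """Seconds available for each frame at the target frame rate (60 FPS -> ~16.7 ms)."""
    return 1.0 / target_fps


def unseen_frames(render_fps: float, refresh_hz: float) -> float:
    """Frames per second rendered beyond what the monitor can refresh."""
    return max(0.0, render_fps - refresh_hz)


def sleep_needed(frame_start: float, now: float, target_fps: float) -> float:
    """Time to idle so the frame lasts one full budget; 0 if the frame already ran long."""
    return max(0.0, frame_budget(target_fps) - (now - frame_start))


def run_capped(render_frame: Callable[[], None], target_fps: float, frames: int) -> float:
    """Render `frames` frames, never faster than target_fps. Returns total elapsed seconds."""
    start = time.perf_counter()
    for _ in range(frames):
        frame_start = time.perf_counter()
        render_frame()  # hypothetical stand-in for the game's real rendering work
        # Give the leftover time back to the OS rather than starting another frame early.
        time.sleep(sleep_needed(frame_start, time.perf_counter(), target_fps))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"Budget per frame at 60 FPS: {frame_budget(60) * 1000:.1f} ms")
    print(f"Unseen frames per second at 120 FPS on a 60Hz screen: {unseen_frames(120, 60):.0f}")
    elapsed = run_capped(lambda: None, target_fps=60, frames=30)
    print(f"30 empty frames capped at 60 FPS took {elapsed:.2f} s")
```

Without the cap, 30 empty frames would finish almost instantly. With it, they take around half a second, and the processor sits idle for most of that time instead of churning out frames the screen can't display.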

So go on, try limiting your frames per second.

Long story short, this has been a disastrous year for Intel and a colossal win for AMD. For me, however, the story isn't as simple as one or the other. My first AMD machine was a Turion 64 X2 laptop back in April 2007. The machine itself was fine. It got a little toasty from time to time and it certainly couldn't play many games of its time. It eventually broke down three years after purchase. AMD had let me down.

But way back in 2005, I was always very sceptical of how my laptop performed compared with the AMD-powered version. It was a beast (but only in physical size) that was supposedly a desktop replacement. The Athlon XP and Athlon 64 versions often outperformed my Pentium 4 version. Although AMD CPUs were arguably better than Intel's at the time, AMD didn't have the market share.

From 2010 onwards, things were all doom and gloom for AMD, which had definitely lost its grip on the market. But as my blog followers will have noticed, I now keep banging on about AMD Ryzen. It is the current reigning champion of the CPU market, with Intel struggling to keep up.

So where does that leave me, a long-term Intel fan who has finally switched to AMD for the first time in over a decade?

I would say that I am on the verge of switching to AMD for my other computers too. Every innovation they add to their chips, such as the recent PCIe 4.0 support, makes me want to stay with AMD. Performance on my PC is much higher than before, especially given that I paid less for it than I have ever paid for a PC.

However, AMD's lack of Thunderbolt support, particularly in the laptop segment, means that I cannot connect some of my peripherals to my computer. Uh oh.

So what do you think? Team Red or Blue?

For a long time, I have been in and out of Microsoft's smartphone ecosystem. I bought my first Microsoft Windows-powered device back in 2005, when I was 13. Back then they were called Windows Mobile phones.

I got my first Windows Phone, an HTC HD7, in 2011, and it feels like a lifetime ago. By then, Microsoft had replaced Windows Mobile with a new, sleeker and more modern operating system known as Windows Phone 7. At first it was a great operating system, mainly because it was different to the competition. Within no time at all, though, I started to see the error of my ways in choosing a device powered by Microsoft's operating system. Months after I got my Windows Phone 7 device, there was still no Facebook, and half of the other most useful apps showed no sign of coming. The big update known as Windows Phone Mango was supposedly bringing sweeping changes that would improve the device. It was a long wait, though, for something that you weren't even sure would fix the issues.

Microsoft entered a market controlled by two large companies that were already its rivals in other markets: Apple and Google. Microsoft's corporate business model was their only strength here. The other two were focused on overall dominance of the smartphone market. Microsoft, with the Office brand amongst other things, could have focused on making their devices better suited to the enterprise market.

Unfortunately for Microsoft, they went down the route of trying to sell their phones to the average user. This created a variety of problems, because instead of focusing on the enterprise market, they had to cater to everyone, much like Apple and Google did with iOS and Android. This made them just another smartphone operating system vendor, and they lost their own identity trying to copy ideas from their competitors.

My HD7 was the only smartphone contract I have ever paid to get out of early, simply because the operating system was so bleeding awful. The phone itself, however, was actually really good.

Windows 10 Mobile came out, and its release was a surprise to me. I had thought that by then Microsoft might have realised there was no point in continuing with something so dreadful. Microsoft had even gone as far as to buy the Lumia line from Nokia and tried to market them as Microsoft phones.

Nothing worked for them. Windows 10 Redstone 2 was released as a big update, and a Surface Phone was rumoured. People actually thought that it had a chance of becoming something, but no. Nothing came of it. This post was inspired by another article, which also talks about how sad the fate of Windows Phone is.

Shortly after I built my latest PC, the Red Revolution, AMD released their Radeon VII cards. These were designed to take on Nvidia, whose competition just keeps getting stronger. Their main target was Nvidia's RTX 2080, which has helped Nvidia stay top dog in the GPU market. AMD, meanwhile, has been more focused on the budget builder, or those just looking to save a bit.

AMD haven't done the same for the mainstream market as of yet, and the RX 5xx series is a bit dated for someone who, like me, is building a new PC. The card in my gaming PC is an AMD Radeon HD 7950, and it's well and truly dated. But I've got brand loyalty. I've gone for ATI/AMD cards for as long as I can remember. There was once a time when ATI cards were superior in many ways to Nvidia's, even though Nvidia's cards had trouble with overheating and ATI's had trouble with software.

It's time AMD launched their next generation of graphics cards. Mainstream cards are my focus. I went high end with this PC, and I probably could have stuck with a mainstream card, as it replaced a Radeon HD 5670, which was very much a mainstream card.

I'm holding out for AMD's next generation of cards in the hope that I can get a mid-range card for a lot less than I paid for my 7950, but so far I'm not impressed.

Does anyone know what's going on with the next generation of cards?!